The emergence of GenAI is revealing a fundamental flaw in the assessment of writing, as large language models (LLMs) can instantly generate text that is indistinguishable from human writing (Nikolic et al., 2024). Human-centred design recommends that one should “start by trying to understand what the real issues are” (Norman, 2013, p. 218), and this is the very process that I engage in here. Can we identify what the real issues are in assessing undergraduate students’ writing in a world with LLMs?

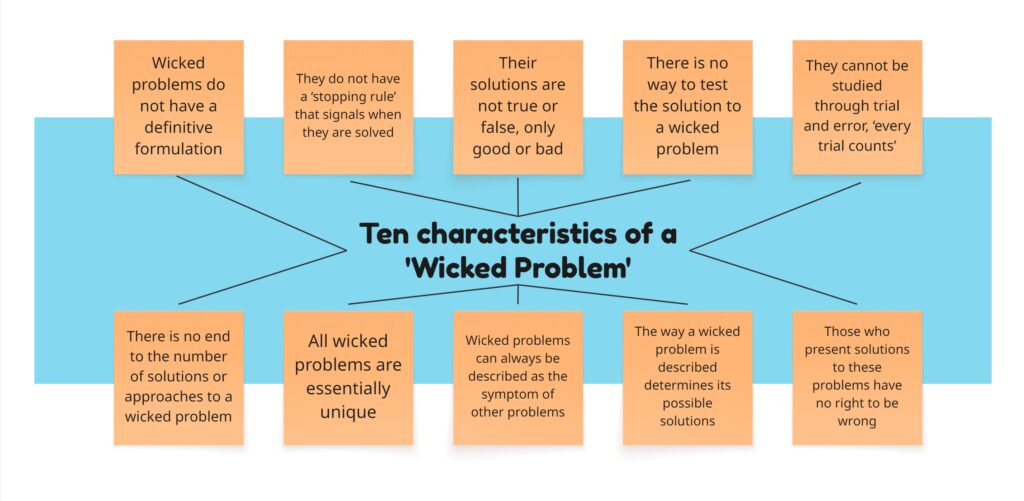

Corbin et al. (2025) call the topic of assessment amidst GenAI a ‘wicked problem’, which, among other things, “cannot be clearly or conclusively defined” (p. 5). This problem of GenAI and assessment means “different things to different people and these varying definitions pull solutions in contradictory directions” (Corbin et al., 2025, p. 5). Human-centred design focuses on people (Norman, 2018), and this may involve considering a wide range of demographics, psychographics, and behaviours, along with equally varied needs, goals, and motivations. These varied perspectives result in disagreement about what the problem of GenAI and assessment actually is, making a single solution impossible (Corbin et al., 2025).

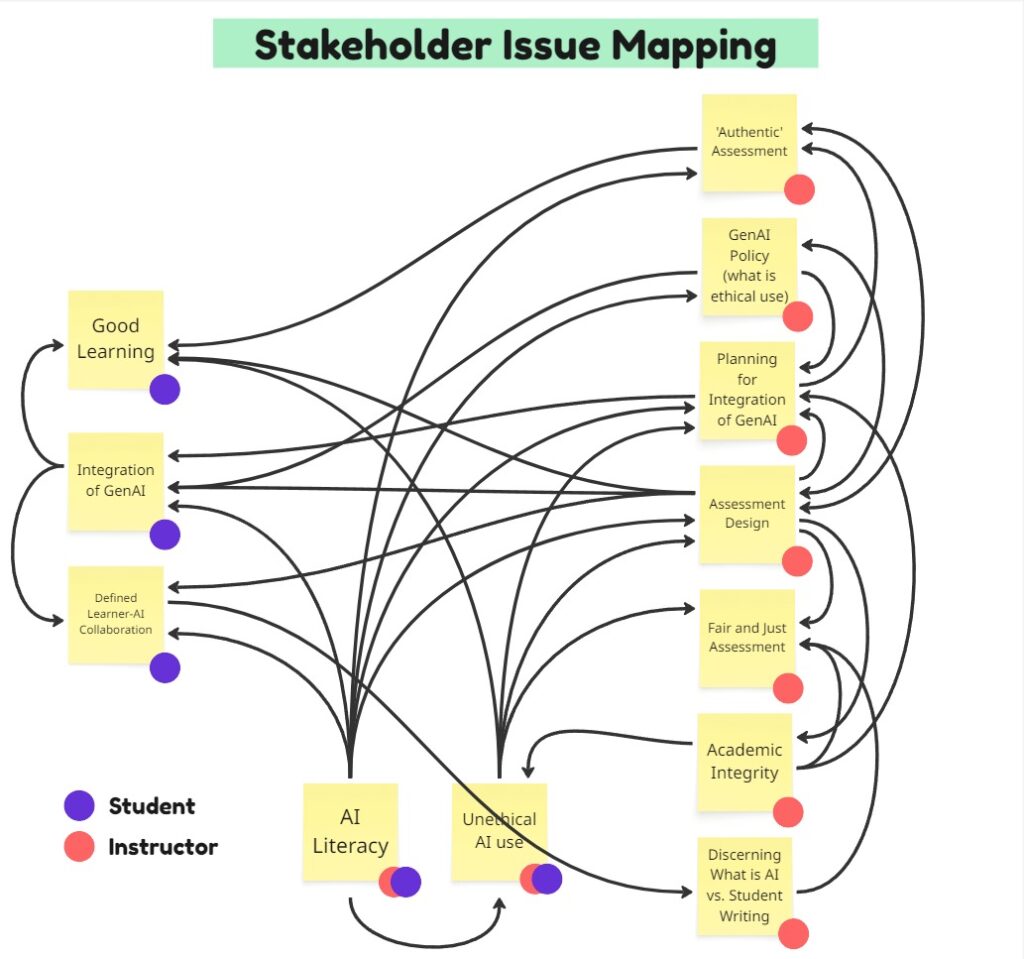

Students and instructors are the two primary stakeholder groups in this problem space. Students are included because supporting their education is the overarching purpose of assessment and they are the ones completing the assessments. Instructors are included because they are responsible for creating and grading assessments.

Methods of Problem Definition

Through empathy mapping, I attempted to determine the real issues by capturing perspectives from a variety of peer-reviewed articles and from generated conversations among the stakeholder groups. Empathy mapping allows a variety of perspectives to be captured and later analyzed to better inform the problem definition. Data points for my empathy mapping included: census data (Brunet & Galarneau, 2022) and COU (2018) to inform demographics; a research study by Srivastava et al. (2003) to inform psychographics; the EduFlow AI case study (Delanoy, 2025) to inform psychographics, behaviours, needs, goals, motivations, and what users ‘say’; and numerous ‘discussions’ generated by the large language model (LLM) ChatGPT 5 (OpenAI, 2025) to inform what users ‘say’.

LLMs, with the capability of generating text from large and diverse training data, have the potential to offer a variety of perspectives. However, every LLM is biased and highly dependent on the user’s prompt, and the user may unknowingly introduce further bias. To encourage a variety of perspectives, I used ChatGPT 5 to generate simulated discussions among students and teachers with varying technical, writing, and AI abilities, as well as a diversity of ages and subject areas. Because I had to prompt ChatGPT 5 for each part of the problem space I wanted to explore, the breadth of ideas was limited to what I, the user, thought to ask. You can view the ChatGPT 5 prompts and the resulting ‘discussions’ and ‘participant’ profiles on my Miro board (linked below).

Issues

I grouped the range of issues under distinct and clearly defined headings:

- Meaningful Learning

- Academic Integrity

- Assessment Design

- Limited Teacher Resources

- Integration of GenAI

- Planning for Integration of GenAI

- AI Literacy

- Unethical AI use

- ‘Authentic’ Assessment

- GenAI Policy (what is ethical use)

- Discerning What is AI vs. Student Writing

- Defined Learner-AI Collaboration

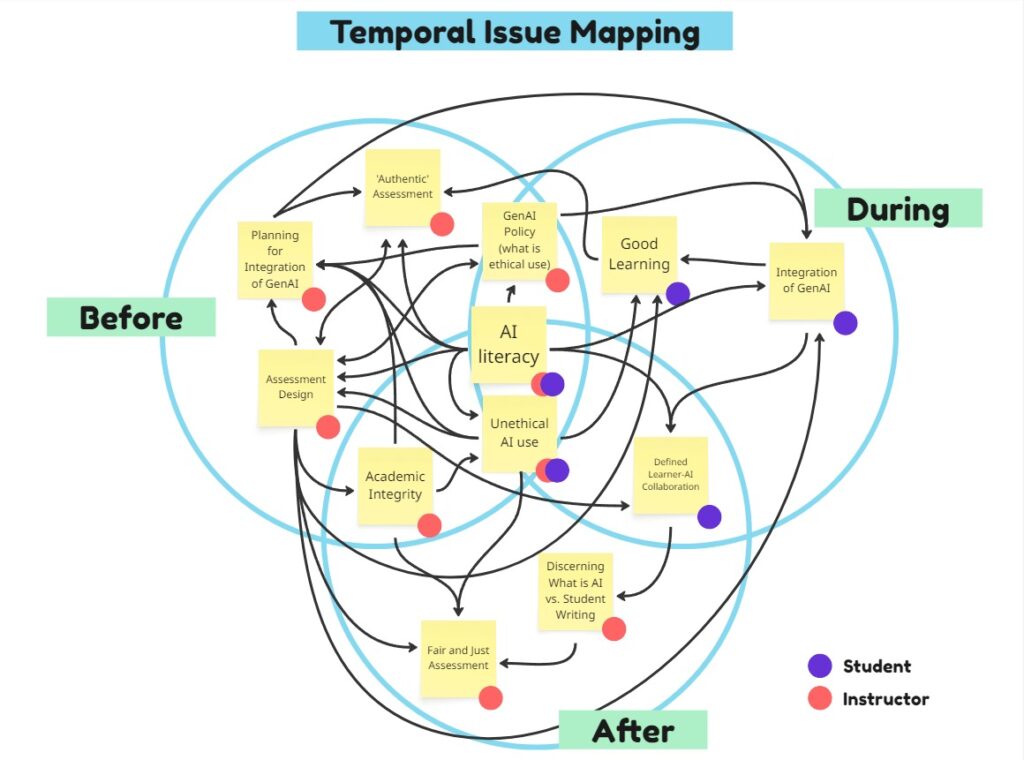

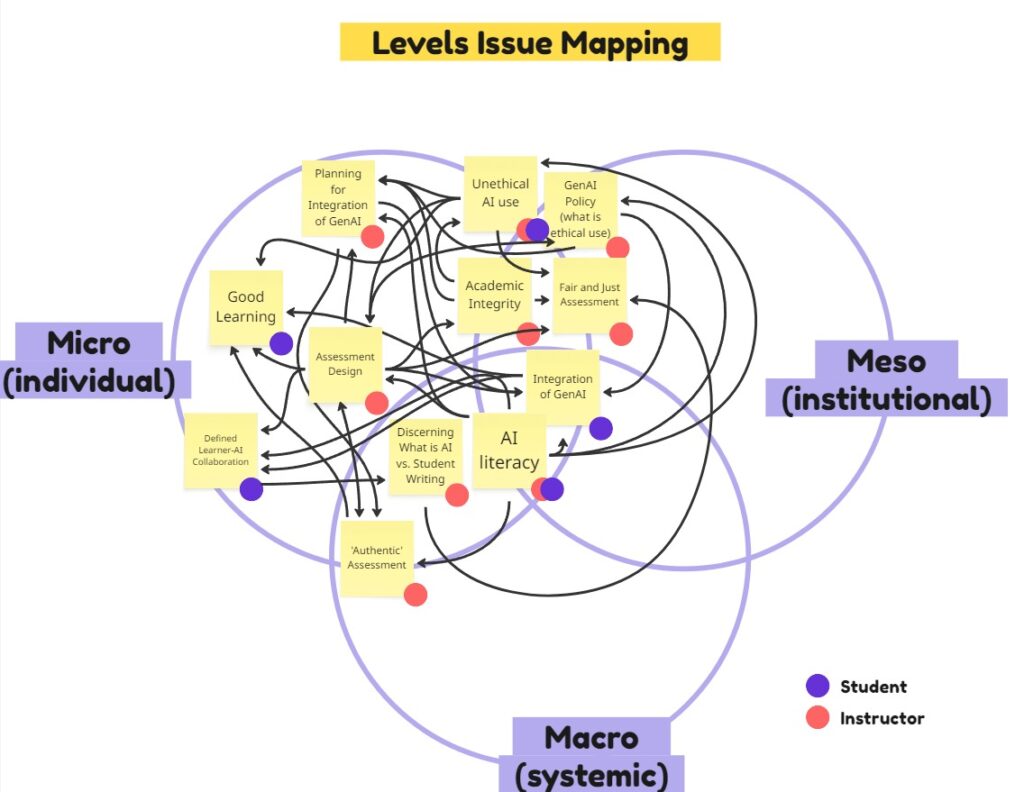

Issue Mapping

I mapped the issues against three different categorizations in an attempt to better understand the network of issues. This allows for exploration of two key human-centred design principles: (1) find the right problem, not just a symptom of the problem, and (2) consider the interconnected system, since optimizing one part does not always benefit the system as a whole (Norman, 2018). A quick note: the issues, relationships, and categorizations are still emerging; this is simply a subjective attempt.

| Categorization | Subcategories | Description |
|---|---|---|
| Stakeholder | student and teacher | who may be associated with each issue and how issues affect each other and the associated stakeholder group |
| Temporally | before, during, and after the assessment | when issues may present and influence other issues in the assessment process |
| Systemic Levels | micro (individual), meso (institution), and macro (systemic) | where issues may present and influence other issues across the systemic levels |

Mapping Issues of Assessing Writing Categorized by Stakeholder

Mapping Issues of Assessing Writing Categorized Temporally: Before, During, and After the Assessment

Mapping Issues of Assessing Writing Categorized by Systemic Level: Micro (Individual), Meso (Institution), and Macro (Systemic)

Discussion

The network of interconnected issues does not reveal a single clear problem; instead, it reveals numerous interconnected problems, where each problem can be described as a symptom of another, in line with the wicked problem definition (Corbin et al., 2025). Unfortunately, this makes the design process more challenging and potentially misguided, as human-centred design recommends finding the source of the problem instead of just a symptom (Norman, 2013). So then, where does one start, especially knowing that how we define the problem determines its possible solutions (Corbin et al., 2025; Norman, 2013)? I attempt to explore the problem definition by starting at a specific issue or subcategory and following the thread to understand the implications of that starting point.

Macro

If we start at the macro (systemic) level, then our design will be reactive to the systemic environment we are a part of. This may be a good choice, as instructors are unable to influence the broader environment, and the tools of our culture have an impact on learning. It is very difficult to remove accessible tools, such as GenAI, from our schools, and doing so would result in inauthentic education. So, beginning at the macro level, we would acknowledge GenAI as an important component of the media ecology and highlight AI literacy and the integration of GenAI as fundamental to the assessment of writing. We may define the problem as: ‘How can we assess a GenAI-learner collaboration in writing?’

Meso

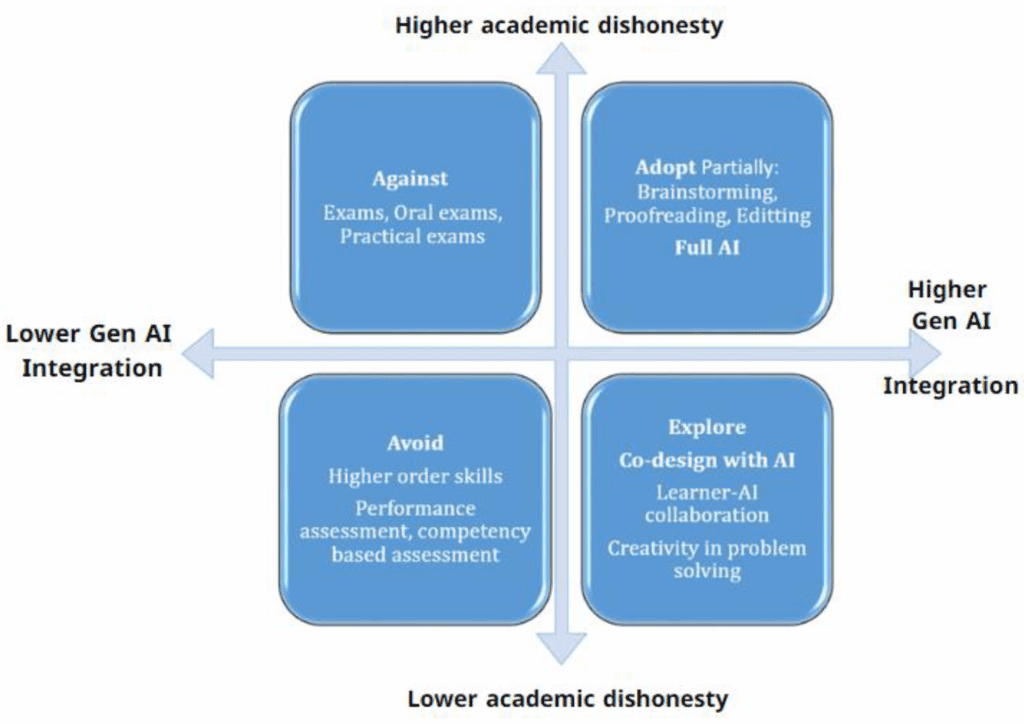

Another common starting point is the meso (institutional) level. Oftentimes, instructors are bound to the practices and policies established by their institutions. From this point, design decisions are made around GenAI policies, academic integrity, and the use or restriction of GenAI tools. We may define the problem as: ‘How can we maintain academic integrity in writing assessment?’ GenAI integration may fall into the ‘against’ or ‘adopt’ quadrants of the AAAE model (Khlaif et al., 2025).

‘Good Learning’

If we focus on providing students with the best education, assessment design emerges as the main influence: assessment that is authentic, is fair, and clearly acknowledges GenAI use. This is the most loosely defined of the constraints explored and places significant responsibility on instructors. Instructors would need high AI literacy and would likely need to personalize assessments to some degree, given each student’s unique context. We may define the problem as: ‘How can GenAI best support students in their writing assessment?’ Here the instructor must explore the purpose of each assessment, determine whether GenAI use is beneficial, and then decide what appropriate use looks like for the task. GenAI integration could be in any quadrant of the AAAE model (Khlaif et al., 2025).

Definition

“There is no end to the number of solutions or approaches to a wicked problem” (Corbin et al., 2025).

This is only the beginning of the questions we could ask about assessing writing in a GenAI world. This problem definition is like a tangled knot, filled with countless threads and possible starting points for unraveling. You simply have to pick a strand and follow where it leads, in the hope that clarity will emerge. Even then, there’s no guarantee that the tangle can ever be fully undone.

We must remember that at the centre is a human (Norman, 2018), interrelated with these various issues. At this very moment, learners and instructors are navigating the complex problem space of GenAI assessment. Many people are experiencing frustration, anxiety, uncertainty, and exhaustion as we search for an elusive solution, yet there is no single, clear problem definition, let alone a single solution. Assessment in the age of GenAI will remain in continuous evolution, shaped by the ongoing pushes and pulls of technological, institutional, and human forces.

Explore the empathy mapping and research divergence on Miro.

References

Brunet, S., & Galarneau, D. (2022). Profile of Canadian graduates at the bachelor level belonging to a group designated as a visible minority, 2014 to 2017 cohorts. Statistics Canada. https://www150.statcan.gc.ca/n1/pub/81-595-m/81-595-m2022003-eng.htm

Corbin, T., Bearman, M., Boud, D., & Dawson, P. (2025). The wicked problem of AI and assessment. Assessment & Evaluation in Higher Education, 0(0), 1–17. https://doi.org/10.1080/02602938.2025.2553340

Council of Ontario Universities (COU). (2018). Faculty at Work: The Composition and Activities of Ontario Universities’ Academic Workforce. https://cou.ca/wp-content/uploads/2018/01/Public-Report-on-Faculty-at-Work-Dec-2017-FN.pdf

Delanoy, N. (2025). EduFlow AI: Personalized learning platform case study [Class handout]. Werklund School of Education, University of Calgary. https://d2l.ucalgary.ca/d2l/home/693026

Google. (2025). Gemini [Large language model]. https://gemini.google.com/

Khlaif, Z. N., Alkouk, W. A., Salama, N., & Abu Eideh, B. (2025). Redesigning Assessments for AI-Enhanced Learning: A Framework for Educators in the Generative AI Era. Education Sciences, 15(2), 174. https://doi.org/10.3390/educsci15020174

Nikolic, S., Sandison, C., Haque, R., Daniel, S., Grundy, S., Belkina, M., Lyden, S., Hassan, G. M., & Neal, P. (2024). ChatGPT, Copilot, Gemini, SciSpace and Wolfram versus higher education assessments: An updated multi-institutional study of the academic integrity impacts of Generative Artificial Intelligence (GenAI) on assessment, teaching and learning in engineering. Australasian Journal of Engineering Education, 29(2), 126–153. https://doi.org/10.1080/22054952.2024.2372154

Norman, D. A. (2013). The design of everyday things (Revised and expanded ed.). Basic Books.

Norman, D. (2018, August 10). Principles of Human-Centered Design [Video recording]. Nielsen Norman Group. https://www.youtube.com/watch?v=rmM0kRf8Dbk

OpenAI. (2025). ChatGPT-5 [Large language model]. https://chat.openai.com/

Srivastava, S., John, O. P., Gosling, S. D., & Potter, J. (2003). Development of personality in early and middle adulthood: Set like plaster or persistent change? Journal of Personality and Social Psychology, 84(5), 1041–1053. https://doi.org/10.1037/0022-3514.84.5.1041

Statistics Canada. (2024). Table 37-10-0144-03 Proportion of full-time teaching staff at Canadian universities, by gender and academic rank. https://www150.statcan.gc.ca/t1/tbl1/en/tv.action?pid=3710014403

Vital, A. (2018, November 28). Big Five Personality Traits – Infographic. Adioma. https://blog.adioma.com/5-personality-traits-infographic

Prompt Sequence

Image generation: Gemini 2.5 Flash Prompt Chaining:

- Image text prompt creation and iteration

- Text-to-image creation and iteration

Initial prompt:

Can you act as a prompt engineer who is an expert of editing text to be utilized in a text-to-image prompt. I will give you the first draft of a textual prompt, and you will output refined text to best visualize the description. Clarify first.

I want to have a complex, impossible ball of yarn at the centre with three strands shooting off in different directions. it’s a mess. I want each push/pull of the string to represent each factor. one cultural and systemic influences, the other to have institutional/policy influences, and the third to represent the individual and good learning. clarify first

Final prompt:

A contemporary abstract artwork with pop colors and crisp, clean lines. At the center, an impossible, tangled ball of yarn — intricate, chaotic, and geometrically paradoxical, reminiscent of an M.C. Escher structure. Three vividly colored strands shoot outward in different directions, each thread taut, showing the tension of push and pull. The background is a surreal, Escher-inspired classroom: staircases fold into themselves, chalkboards loop in perspective, and desks merge into infinite patterns. Each strand connects to a blend of literal and abstract forms — one evoking cultural and systemic influences, one institutional and policy forces, and one representing individual learning and growth. The mood is chaotic yet playful, balanced between order and disorder, with a sense of dynamic motion and conceptual tension.

GenAI Text-to-Image Bias?

The image depicts a Eurocentric classroom through its artifacts: desks organized into a neat grid, a blackboard, and colourful building blocks. A European castle is likely used in an effort to depict an institution.