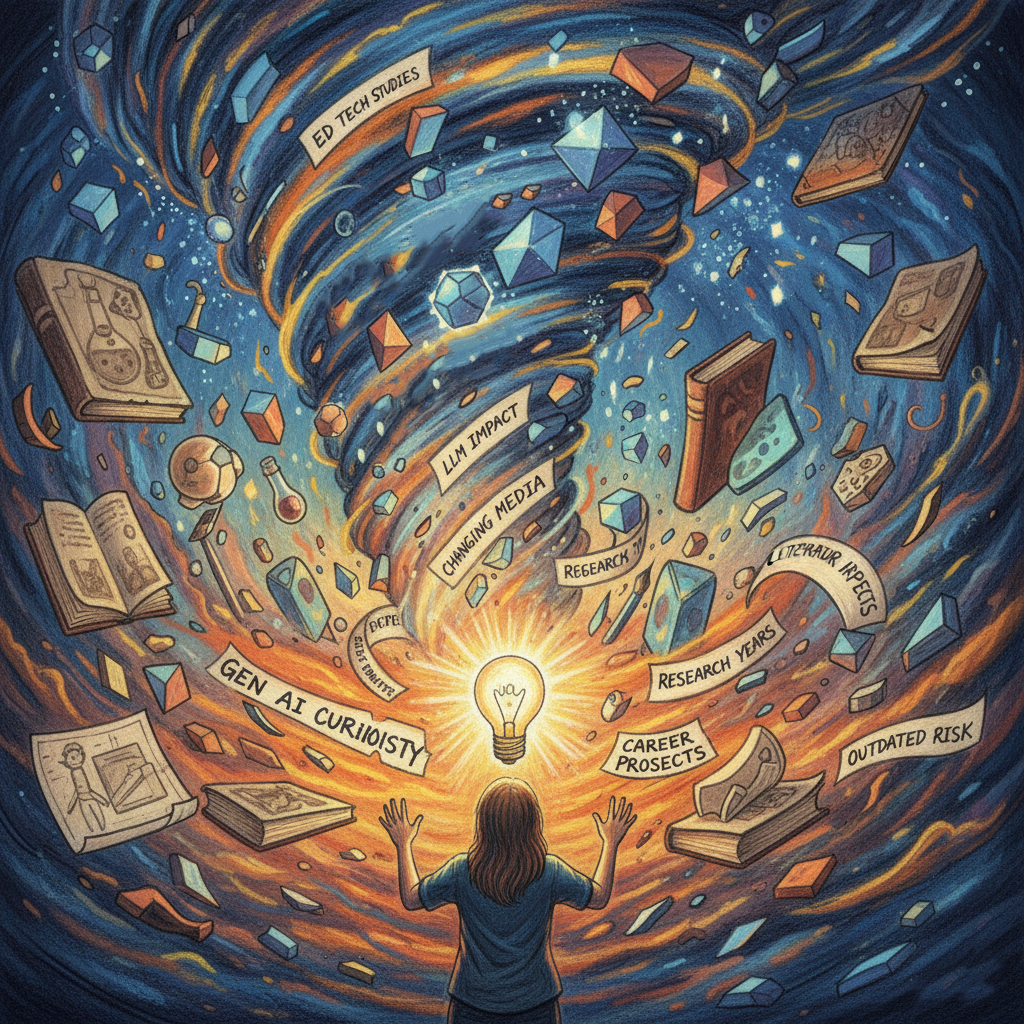

Research, the process of collecting and analyzing information to increase our understanding, is a daunting and wide-ranging undertaking. When there are so many curiosities, interests, and problems in this world, how does one choose where to focus?

In defining my doctoral research direction, I have been pulled by many factors:

- experience in educational technology

- experience with the design process

- my curiosity about generative artificial intelligence

- our changing media landscape due to large language models

- what knowledge gaps exist in the literature? (though identifying them feels speculative before diving in)

- what do I want to spend years of research on?

- how my research may impact my future career prospects

- the risk of my research being outdated before it is even published (especially within a field moving as rapidly as generative artificial intelligence)

Attempting to juggle these factors felt impossible, and I watched my research concept spiral time and time again, each time getting closer to a refined vision. Upon reflection, this is the very process needed: every step of the way, as painfully confusing as it was in the moment, provided clarity. Creswell (2012, p. 60) offers a structure for communicating the result of this process, moving from broad to specific:

Topic

“the broad subject matter addressed by the study” (Creswell, 2012, p. 60)

The area of my research is the assessment of learning, with particular attention to process-based approaches for evaluating student writing. I’m interested in methods for making learning visible: how can we document and genuinely understand students’ learning? After all, educators can’t assess what they cannot see.

Problem

“a general educational issue, concern, or controversy addressed in research that narrows the topic” (Creswell, 2012, p. 60)

Education does not occur in a vacuum. Shifts in the broader media ecology affect how, why, and what we teach and learn. Assessment is a particular concern in education and has recently been disrupted by the proliferation of large language models (LLMs), which are capable of instantly generating text that is indistinguishable from human writing (Nikolic et al., 2024; Suchman, 2023).

This problem can be framed by a variety of theories and frameworks (this is just a small start):

- Sociotechnical systems theory proposes that social, technical, and environmental factors are inherently interdependent, existing in continuous interplay and redesign (Pasmore et al., 2019). A disruptive technological tool, such as an LLM, demands a rebalancing of the system.

- The TPACK model holds that a well-designed educational experience is made by aligning technological, pedagogical, and content knowledge. Generative artificial intelligence has rapidly added complexity to the technology domain, destabilizing this fine balance (Kohen-Vacs et al., 2024).

- Distributed cognition views technology as an integral component of a system in which competency is the ability to engage with and manage a distributed system of individuals, environments, and tools (Fawns & Schuwirth, 2024; Pea, 1993). Under this theory, a human’s ability to leverage LLMs is viewed as a form of thinking.

Assessment is a focal point of much of the controversy surrounding LLMs. Traditional methods, such as take-home essays, are no longer reliable, nor do they assess human-AI collaboration in writing, which is likely the future (Eaton, 2023).

Purpose

“the major intent or objective of the study used to address the problem” (Creswell, 2012, p. 60)

Much of the academic literature on the disruptions caused by generative AI highlights the assessment of end products, such as essays, as a core challenge (e.g., Adhikari, 2023; Corbin et al., 2025; Finkel-Gates, 2025; Gagich, 2025). These scholars point to process-based assessment as a promising way forward. The purpose of my study is to explore emerging process-based strategies for writing assessment in the current age of LLMs.

Question

“narrows the purpose into specific questions that the researcher would like answered or addressed in the study” (Creswell, 2012, p. 60)

In exploring process-based writing assessment strategies, I am particularly interested in methods for making the writing process visible.

- How can the process of writing be captured?

- Which strategies are adaptable across a variety of media ecologies?

- Which strategies can assess human-AI collaboration in learning?

- What specific contextual factors and considerations shape the use of these strategies?

References

Adhikari, B. (2023). Thinking beyond Chatbots’ Threat to Education: Visualizations to Elucidate the Writing or Coding Process. Education Sciences, 13(9), 922. https://doi.org/10.3390/educsci13090922

Corbin, T., Dawson, P., & Liu, D. (2025). Talk is cheap: Why structural assessment changes are needed for a time of GenAI. Assessment & Evaluation in Higher Education, 0(0), 1–11. https://doi.org/10.1080/02602938.2025.2503964

Creswell, J. W. (2012). An Introduction to Educational Research. In Educational research: Planning, conducting, and evaluating quantitative and qualitative research (Fourth Edition). Pearson Education, Inc.

Eaton, S. E. (2023). Postplagiarism: Transdisciplinary ethics and integrity in the age of artificial intelligence and neurotechnology. International Journal for Educational Integrity, 19(1), 23. https://doi.org/10.1007/s40979-023-00144-1

Fawns, T., & Schuwirth, L. (2024). Rethinking the value proposition of assessment at a time of rapid development in generative artificial intelligence. Medical Education, 58(1), 14–16. https://doi.org/10.1111/medu.15259

Finkel-Gates, A. (2025). ChatGPT in academic assessments: Upholding integrity. Journal of Learning Development in Higher Education, 36. https://doi.org/10.47408/jldhe.vi36.1491

Gagich, M. (2025). Using Process-Based Pedagogical Assessment Practices to Challenge Generative AI in the Writing Classroom. In P. W. Wachira, X. Liu, & S. Koc (Eds.), Advances in Educational Marketing, Administration, and Leadership (pp. 1–32). IGI Global. https://doi.org/10.4018/979-8-3693-6351-5.ch001

Kohen-Vacs, D., Amzalag, M., Weigelt-Marom, H., Gal, L., Kahana, O., Raz-Fogel, N., Ben-Aharon, O., Reznik, N., Elnekave, M., & Usher, M. (2024). Towards a call for transformative practices in academia enhanced by generative AI. European Journal of Open, Distance and E-Learning, 26(s1), 35–50. https://doi.org/10.2478/eurodl-2024-0006

Nikolic, S., Sandison, C., Haque, R., Daniel, S., Grundy, S., Belkina, M., Lyden, S., Hassan, G. M., & Neal, P. (2024). ChatGPT, Copilot, Gemini, SciSpace and Wolfram versus higher education assessments: An updated multi-institutional study of the academic integrity impacts of Generative Artificial Intelligence (GenAI) on assessment, teaching and learning in engineering. Australasian Journal of Engineering Education, 29(2), 126–153. https://doi.org/10.1080/22054952.2024.2372154

Pasmore, W., Winby, S., Mohrman, S. A., & Vanasse, R. (2019). Reflections: Sociotechnical Systems Design and Organization Change. Journal of Change Management, 19(2), 67–85. https://doi.org/10.1080/14697017.2018.1553761

Pea, R. D. (1993). Practices of distributed intelligence and designs for education. In G. Salomon (Ed.), Distributed cognitions: Psychological and educational considerations (pp. 47–87). Cambridge University Press.

Suchman, L. (2023). The uncontroversial ‘thingness’ of AI. Big Data & Society, 10(2), 20539517231206794. https://doi.org/10.1177/20539517231206794